Room-scale versus stationary experience in virtual reality

Room-scale refers to the ability of a user to freely walk around the play area of a virtual reality experience, with his real-life movements tracked into the digital environment. For first-generation virtual reality devices, this will require extra equipment outside of the headset, such as infrared sensors or cameras, to monitor the user’s movement in 3D space.

Want to stroll over to the school of fish swimming around you underwater? Crawl around on the floor of your virtual spaceship chasing after your robot dog? Walk around and explore every inch of a 3D replica of Michelangelo’s David? Provided your real-world physical space has room for you to do so, you can do these things in a room-scale experience.

Although most of the first-generation virtual reality devices require external devices to provide a room-scale experience, this is quickly changing in many second-generation devices, which utilize inside-out tracking.

A stationary experience, on the other hand, is just what it sounds like: a virtual reality experience designed around the user remaining seated or standing in a single location for the bulk of the experience. Currently, the higher-end virtual reality devices (such as the Vive, Rift, or WinMR headsets) allow for room-scale experiences, while the lower-end, mobile-based experiences do not.

Room-scale experiences can feel much more immersive than stationary experiences, because a user’s movement is translated into their digital environment. If a user wants to walk across the digital room, she simply walks across the physical room. If she wants to reach under a table, she simply squats down in the physical world and reaches under the table. Doing the same in a stationary experience would require movement via a joystick or similar hardware, which pulls the user out of the experience and makes it feel less immersive. In the real world, we experience our reality through movement in physical space; the virtual reality experiences that allow that physical movement go a long way toward feeling more “real.”

Room-scale is not without its own set of drawbacks. Room-scale experiences can require a fairly large empty physical space if a user wants to walk around in virtual reality without bumping into physical obstacles. Having entire rooms of empty space dedicated to virtual reality setups in homes isn’t practical for most of us — though there are various tricks that developers can use to combat this lack of space.

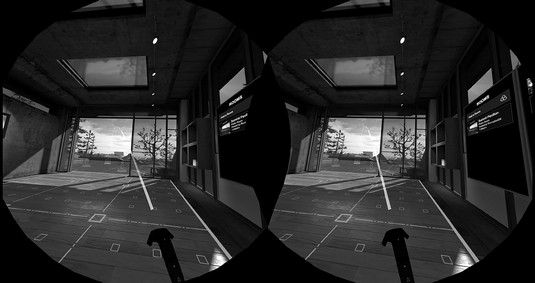

Room-scale digital experiences must also show, inside the digital world, where real-world physical barriers exist, to prevent users from running into doors and walls.

The accompanying image illustrates how the HTC Vive headset currently addresses this issue. When a user in a headset wanders too close to a real-life barrier (as defined during room setup), a dashed-line green “hologram wall” alerts the user to the obstacle. It isn’t a perfect solution, but considering the challenges of movement in virtual reality, it works well enough for this generation of headsets. Perhaps a few generations down the road, headsets will be able to detect real-world obstacles automatically and flag them in the digital world.

The “hologram wall” border as seen in HTC Vive.
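The boundary check described above can be sketched in a few lines. This is a minimal illustration, not the Vive's actual implementation: it assumes a rectangular play area measured in meters, and the names `PlayArea`, `should_show_boundary`, and the 0.5 m `warn_distance` default are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlayArea:
    """Hypothetical axis-aligned play area on the floor, in meters."""
    min_x: float
    max_x: float
    min_z: float
    max_z: float

    def distance_to_boundary(self, x: float, z: float) -> float:
        """Smallest gap between a floor position and any edge.

        The value goes negative once the position is outside the area.
        """
        return min(x - self.min_x, self.max_x - x, z - self.min_z, self.max_z - z)


def should_show_boundary(area: PlayArea, x: float, z: float,
                         warn_distance: float = 0.5) -> bool:
    """Show the warning wall when the headset is within warn_distance of an edge.

    warn_distance is an assumed value chosen for illustration only.
    """
    return area.distance_to_boundary(x, z) < warn_distance


if __name__ == "__main__":
    room = PlayArea(min_x=0.0, max_x=3.0, min_z=0.0, max_z=2.0)
    print(should_show_boundary(room, 1.5, 1.0))  # user in the middle of the room
    print(should_show_boundary(room, 2.8, 1.0))  # user close to the max_x edge
```

A real system would run a check like this every frame against the headset's tracked position, and would typically fade the wall in gradually rather than switching it on at a hard threshold.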

Many room-scale virtual reality experiences also require users to travel distances far greater than their physical space can accommodate. The solution for traveling in stationary experiences is generally a simple choice. Because a user can’t physically move about in a stationary experience, either the entire experience takes place in a single location or a different method of locomotion is used throughout (for example, using a controller to move a character in a video game).

Room-scale experiences introduce a different set of issues. A user can now move around in the virtual world, but only as far as that user’s physical setup allows. Some users may be able to physically walk a distance of 20 feet in virtual reality. Others may have tight play areas, with only 7 feet of walkable space that can be mapped into the virtual environment.

Virtual reality developers now have some tough choices to make regarding how to enable the user to move around in both the physical and virtual environments. What happens if the user needs to reach an area slightly outside of the usable physical space in the room? Or around the block? Or miles away?

If a user needs to travel across the room to pick up an item, in room-scale virtual reality he may be able to simply walk to the object. If he needs to travel a great distance in room-scale, however, issues start to arise. In these instances, developers need to determine when to let a user physically move to close-proximity objects, but also when to help a user reach objects farther away. These problems are solvable, but with virtual reality still in its relative infancy, the best practices for virtual reality developers regarding these solutions are still being experimented with.
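One simple way to frame that decision is sketched below. It is only an illustration under stated assumptions: the function name `choose_locomotion` is hypothetical, the play area is a rectangle expressed in virtual-world coordinates, and "teleport" stands in for whatever assisted locomotion a developer chooses.

```python
def choose_locomotion(target, play_area):
    """Decide how the user should reach a target position.

    target:    (x, z) position of the object in the virtual world.
    play_area: (min_x, max_x, min_z, max_z), the part of the virtual world
               that currently overlaps the user's physical play area.

    Returns "walk" when the target is physically reachable, otherwise
    "teleport" (a placeholder for any assisted locomotion method).
    """
    x, z = target
    min_x, max_x, min_z, max_z = play_area
    inside = min_x <= x <= max_x and min_z <= z <= max_z
    return "walk" if inside else "teleport"


if __name__ == "__main__":
    area = (0.0, 3.0, 0.0, 2.0)
    print(choose_locomotion((1.0, 1.0), area))   # object across the room
    print(choose_locomotion((10.0, 1.0), area))  # object well outside the room
```

Because each user's play area differs, the same target can be walkable for one user and require assistance for another, which is why the play area is passed in rather than hard-coded.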

Virtual reality devices: Inside-out tracking

Currently only the higher-end consumer headsets offer room-scale experiences. These high-end headsets typically require a wired connection to a computer, and users often end up awkwardly stepping over wires as they move about in room-scale. This wired problem is generally twofold: Wiring is required for the image display within the headset, and wiring is required for tracking the headset in physical space.

Headset manufacturers have been trying to solve this wired display issue, and many of the second generation of virtual reality headsets are being developed with wireless solutions in mind. In the meantime, companies such as DisplayLink and TPCast are also researching ways to stream video to a headset without the need for a wired connection.

On the tracking side, both the Vive and the Rift are currently limited by their externally-based outside-in tracking systems, where the headset and controllers are tracked via an external device.

Outside of the headset, additional hardware (called sensors for the Rift and lighthouses for the Vive) is placed around the room where the user will be moving while in virtual reality. These sensors are separate from the headset itself. Placing them about the room allows for extremely accurate tracking of the user’s headset and controllers in 3D space, but users are limited to movement within the sensors’ field of view. When the user moves outside that space, tracking is lost.

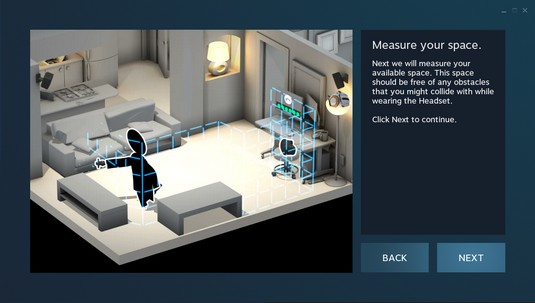

The accompanying image shows the setup for the first-generation HTC Vive, which requires you to mount lighthouses around the space you want to track. You then define your “playable” space by dragging the controllers around the available area (which must be within visible range of the lighthouses). This process defines the area you’re able to move in. Many first-generation room-scale headsets handle their space definition similarly.

HTC Vive room setup.
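To make the space-definition step concrete, here is a minimal sketch of how traced controller positions could be turned into a usable floor area. It is not the Vive's room-setup code: the function names are hypothetical, the traced points are assumed to form a simple (non-self-intersecting) polygon on the floor, and the 3.0 square meter threshold is an illustrative default rather than a vendor requirement.

```python
def polygon_area(points):
    """Area of a simple polygon from ordered (x, z) floor vertices, in square meters.

    Uses the shoelace formula; vertex order may be clockwise or counterclockwise.
    """
    total = 0.0
    for i, (x1, z1) in enumerate(points):
        x2, z2 = points[(i + 1) % len(points)]  # wrap around to the first vertex
        total += x1 * z2 - x2 * z1
    return abs(total) / 2.0


def is_large_enough(points, min_area=3.0):
    """Check the traced area against an assumed minimum (illustrative value)."""
    return polygon_area(points) >= min_area


if __name__ == "__main__":
    # Corners recorded while dragging a controller around a 3 m by 2 m space.
    traced = [(0.0, 0.0), (3.0, 0.0), (3.0, 2.0), (0.0, 2.0)]
    print(polygon_area(traced))
    print(is_large_enough(traced))
```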

In contrast, inside-out tracking places the sensors within the headset itself, removing the need for external tracking sensors. It relies on the headset to interpret depth and acceleration cues from the real-world environment in order to coordinate the user’s movement in virtual reality. Windows Mixed Reality headsets currently utilize inside-out tracking.

Inside-out tracking has been a “holy grail” for virtual reality; removing the need for external sensors means users may no longer need to be confined to a small area for movement. Just like every technology choice, however, it comes at a cost. Currently, inside-out tracking provides less accurate environmental tracking and suffers from other drawbacks, such as losing track of the controllers if they travel too far out of line-of-sight of the headset.

However, manufacturers are focused on ironing out these issues, with many second-generation headsets utilizing inside-out tracking for physical movement in virtual reality. Inside-out tracking may not fully relieve you of having to define a “playable” space for virtual reality. You still will need a method of defining what area is clear for you to move about in. However, solid inside-out tracking will allow for wireless headsets without external sensors, a big leap for the next generation of virtual reality.

Even though most high-end first-generation virtual reality headsets still require tethering to a computer or external sensors, companies are finding creative ways to work around these issues. Companies such as the VOID have implemented their own innovative solutions that offer a glimpse of what sort of experiences a fully self-contained virtual reality headset could offer. The VOID is a location-based virtual reality company offering what they term hyper-reality, allowing users to interact with digital elements in a physical way.

The cornerstone of the VOID’s technology is their backpack virtual reality system. The backpack/headset/virtual gun system allows the VOID to map out entire warehouses worth of physical space and overlay it with a one-to-one digital environment of the physical space. The possibilities this creates are endless. Where there is a plain door in the real world, the VOID can create a corresponding digital door oozing with slime and vines. What might be a nondescript gray box in the real world can become an ancient oil lamp to light the user’s way through the fully digital experience.

The backpack form factor the VOID currently utilizes is likely not one that will see success at a mass consumer scale. It’s cumbersome, expensive, and likely too complex to serve a mass audience. However, for the location-based experiences the VOID provides, it works well and gives a glimpse of the level of immersion virtual reality could offer once untethered from cords and cables.

Both Vive and Rift appear to be gearing up to ship wireless headsets as early as 2018, with both the HTC Vive Focus (already released in China) and Oculus’s upcoming Santa Cruz developer kits utilizing inside-out tracking.

Haptic feedback in virtual reality devices

Haptic feedback, which is the sense of touch designed to provide information to the end user, is already built into a number of existing virtual reality controllers. The Xbox One controller, the HTC Vive Wands, and the Oculus Touch controllers all have the option to rumble/vibrate to provide the user some contextual information: You’re picking an item up. You’re pressing a button. You’ve closed a door.

However, the feedback these controllers provide is limited, similar to your mobile device vibrating when it receives a notification. Although it’s a nice first step and better than no feedback whatsoever, the industry needs to push haptics much further to truly simulate the physical world while inside the virtual. There are a number of companies looking to solve the issue of touch within virtual reality.

Go Touch VR has developed a virtual reality touch system to be worn on one or more fingers to simulate physical touch in virtual reality. The Go Touch VR is little more than a device that straps to the ends of your fingers and pushes with various levels of force against your fingertips. Go Touch VR claims that the device can generate a surprisingly realistic sensation of grabbing a physical object in the digital world.

Other companies, such as Tactical Haptics, are looking to solve the haptic feedback problem within the controller. Using a series of sliding plates in the surface handle of their Reactive Grip controller, they claim to be able to simulate the types of friction forces you would feel when interacting with physical objects.

When hitting a ball with a tennis racquet, you would feel the racquet push down against your grip. When moving heavy objects, you would feel greater force pushing against your hand than when moving lighter objects. When painting with a brush, you would feel the brush pull against your hand as if you were dragging it over paper or canvas. Tactical Haptics claims to be able to emulate each of these scenarios far more precisely than the simple vibration most controllers currently allow.

On the far end of the scale of haptics in virtual reality are companies such as HaptX and bHaptics, developing full-blown haptic gloves, vests, suits, and exoskeletons.

bHaptics is currently developing the wireless TactSuit. The TactSuit includes a haptic mask, haptic vest, and haptic sleeves. Powered by eccentric rotating mass vibration motors, it distributes these vibration elements over the face, front and back of the vest, and sleeves. According to bHaptics, this allows for a much more refined immersive experience, allowing users to “feel” the sensation of an explosion, of a weapon recoil, or the sensation of being punched in the chest.
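As a rough illustration of how vibration could be distributed across an array of motors, the sketch below drives each motor at a strength that falls off with its distance from a virtual impact point. This is an assumption made for illustration, not bHaptics's API or algorithm: `motor_intensities`, the linear falloff, and the 2D motor layout are all hypothetical.

```python
import math


def motor_intensities(motor_positions, impact_point, radius):
    """Return a drive level in [0.0, 1.0] for each motor.

    motor_positions: list of (x, y) motor locations on the garment, in meters.
    impact_point:    (x, y) location of the virtual hit, in meters.
    radius:          distance at which the effect fades to nothing (must be > 0).

    Uses a simple linear falloff: 1.0 at the impact point, 0.0 at or beyond radius.
    """
    levels = []
    for position in motor_positions:
        distance = math.dist(position, impact_point)
        levels.append(max(0.0, 1.0 - distance / radius))
    return levels


if __name__ == "__main__":
    motors = [(0.0, 0.0), (0.1, 0.0), (0.5, 0.0)]
    print(motor_intensities(motors, impact_point=(0.0, 0.0), radius=0.2))
```

A punch to the chest would then activate the nearest motors strongly and leave distant ones idle, while an explosion could be modeled with a much larger radius.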

HaptX is one of the companies exploring the farthest reaches of haptics in virtual reality with its HaptX platform. HaptX is creating smart textiles to allow you to feel texture, temperature, and shape of objects. It’s currently prototyping a haptic glove to take virtual input and apply realistic touch and force feedback to virtual reality. But HaptX takes a step beyond the standard vibrating points of most haptic hardware. HaptX has invented a textile that pushes against a user’s skin via embedded microfluidic air channels that can provide force feedback to the end user.

HaptX claims that its technology provides a far superior experience to devices that rely only on vibration to simulate haptics. When combined with the visuals of virtual reality, HaptX’s system takes users a step closer to fully immersive virtual reality experiences. The same technology could eventually be extended into a full-body haptic platform, allowing you to truly feel virtual reality. This image shows an example of HaptX’s latest glove prototype for virtual reality.

Courtesy of HaptX

HaptX VR gloves.