Developers are still defining the design principles that will help guide the AR field forward. The field is still very young, so these best practices are not set in stone. That makes AR an exciting field to be working in! It’s akin to the early Internet days, where no one was quite sure what would work well and what would fall on its face. Experimenting is encouraged, and you may even find yourself designing a way of navigating in AR that could become the standard that millions of people will use every day!

Eventually a strong set of standards will emerge for AR. In the meantime, a number of patterns are taking shape around AR experiences that can guide your design process.

Starting up your AR app

For many users, AR experiences are still new territory. When using a standard computer application, videogame, or mobile app, many users can get by with minimal instruction due to their familiarity with similar applications. However, that is not the case for AR experiences. You can’t simply drop users into your AR application with no context; this may be the very first AR experience they’ve ever used. Make sure to guide users with very clear and direct cues on how to use the application on initial startup. Consider holding back on opening up deeper functionality within your application until a user has exhibited some proficiency with the simpler parts of your application.

Many AR experiences evaluate the user’s surroundings in order to map digital holograms in the real world. The camera on the AR device needs to see the environment and use this input to determine where AR holograms can appear. This orientation process can take some time, especially on mobile devices, and can often be sped up by encouraging users to explore their surroundings with the device.

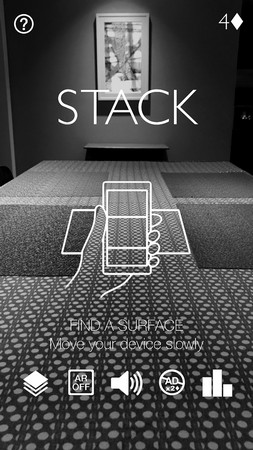

To keep users from wondering whether the app has frozen while this mapping occurs, show an indication that a process is taking place, and consider inviting the user to explore her surroundings or look for a surface on which to place the AR experience. Consider displaying an onscreen message instructing the user to look around her environment. This image displays a screenshot from the iOS game Stack AR, instructing a user to move her device around her environment.

Stack AR instructing a user to move camera around the environment.
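As a rough illustration of this kind of startup guidance, the sketch below maps a startup phase to an onscreen prompt. The phase names and message text are hypothetical; a real AR SDK reports tracking status through its own API, which this example does not attempt to model.

```python
from enum import Enum, auto


class StartupPhase(Enum):
    """Hypothetical startup phases, not taken from any real AR SDK."""
    INITIALIZING = auto()
    SCANNING = auto()
    SURFACE_FOUND = auto()


# Hypothetical prompt text for each phase of the startup flow.
PROMPTS = {
    StartupPhase.INITIALIZING: "Starting camera...",
    StartupPhase.SCANNING: "Move your device slowly to look around the room.",
    StartupPhase.SURFACE_FOUND: "Surface found. Tap to place the experience.",
}


def startup_prompt(phase: StartupPhase) -> str:
    """Return the onscreen message to show for the current startup phase."""
    return PROMPTS[phase]


if __name__ == "__main__":
    for phase in StartupPhase:
        print(f"{phase.name}: {startup_prompt(phase)}")
```

Keeping the prompts in one table makes it easy to check that every phase shows the user something, so the screen never sits idle while mapping is under way.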

Most AR applications map the real world via a computational process called simultaneous localization and mapping (SLAM). This process refers to constructing and updating a map of an unknown environment, and tracking a user’s location within that environment.

If your application requires a user to move about in the real world, think about introducing movement gradually. Users should be given time to adapt to the AR world you’ve established before they begin to move around. If motion is required, it can be a good idea to guide the user through it on the first occurrence via arrows or text callouts instructing him to move to certain areas or explore the holograms.

As with VR applications, it’s important that AR applications run smoothly in order to maintain the illusion that augmented holograms exist within the real-world environment. Your application should maintain a consistent frame rate of 60 frames per second (fps). This means you need to make sure your application is optimized as much as possible. Graphics, animations, scripts, and 3D models all affect the potential frame rate of your application. For example, you should aim for the highest-quality 3D models you can create while keeping the polygon count of those models as low as possible.
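To make the 60 fps target concrete, it can help to think of it as a time budget per frame. The sketch below is a minimal illustration with hypothetical function names and sample timings; it is not tied to any particular engine's profiler.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / target_fps


def frames_over_budget(frame_times_ms, target_fps=60.0):
    """Return the frame times that exceeded the per-frame budget."""
    budget = frame_budget_ms(target_fps)
    return [t for t in frame_times_ms if t > budget]


if __name__ == "__main__":
    # Hypothetical measured frame times, in milliseconds.
    samples = [10.0, 16.0, 20.0, 33.4]
    print(f"Budget at 60 fps: {frame_budget_ms(60):.2f} ms")
    print("Slow frames:", frames_over_budget(samples))
```

At 60 fps each frame has roughly 16.7 ms in total, and that budget has to cover tracking, scripts, animation, and rendering together.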

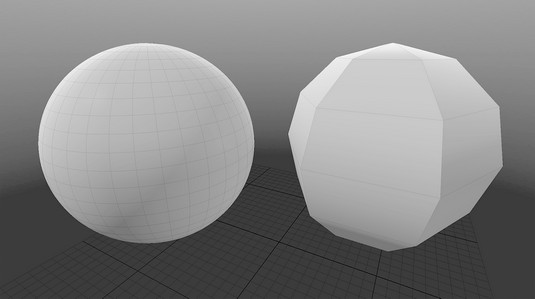

3D models are made up of polygons. In general, the higher the polygon count of a model, the smoother and more realistic that model will look. A lower polygon count typically means a “blockier” model that may look less realistic. Balancing realism against a low polygon count is an art form perfected by many game designers. The lower the polygon count of a model, the more performant that model will likely be.

The image below shows an example of a 3D sphere with a high polygon count and a low polygon count. Note the difference in smoothness between the high-polygon model and the low-polygon model.

High-poly versus low-poly sphere models.
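To get a feel for how quickly polygon counts grow, the sketch below counts the triangles in a sphere built from latitude rings and longitude segments (a common "UV sphere" construction). This construction is an assumption for illustration; the spheres in the figure may have been modeled differently.

```python
def uv_sphere_triangles(segments: int, rings: int) -> int:
    """Triangle count of a UV sphere.

    segments: divisions around the sphere (longitude), at least 3.
    rings: bands from pole to pole (latitude), at least 2.

    The two polar bands are triangle fans with `segments` triangles each.
    Every other band is a strip of `segments` quads, or 2 * segments triangles.
    """
    if segments < 3 or rings < 2:
        raise ValueError("need at least 3 segments and 2 rings")
    polar_caps = 2 * segments
    middle_bands = 2 * segments * (rings - 2)
    return polar_caps + middle_bands


if __name__ == "__main__":
    for segments, rings in [(8, 4), (32, 16), (64, 32)]:
        count = uv_sphere_triangles(segments, rings)
        print(f"{segments} x {rings}: {count} triangles")
```

Doubling both the segment and ring counts roughly quadruples the triangle count, which is why small increases in smoothness can be expensive.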

Similarly, ensure that the textures (or images) used in your application are optimized. Large images can cause a performance hit on your application, so do what you can to ensure that image sizes are small and the images themselves have been optimized. AR software has to perform a number of calculations that can put stress on the processor. The better you can optimize your models, graphics, scripts, and animations, the better the frame rate you’ll achieve.
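As a rough way to reason about texture cost, the sketch below estimates the memory an uncompressed texture occupies once loaded. It assumes 4 bytes per pixel (8-bit RGBA) and an optional full mipmap chain; real engines often use compressed formats, so treat these figures as an upper-bound illustration.

```python
def texture_bytes(width: int, height: int,
                  bytes_per_pixel: int = 4, mipmaps: bool = True) -> int:
    """Estimate in-memory size of an uncompressed texture, in bytes."""
    if not mipmaps:
        return width * height * bytes_per_pixel
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if w == 1 and h == 1:
            break
        # Each mip level halves both dimensions, down to 1 x 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


if __name__ == "__main__":
    for size in (512, 1024, 4096):
        mib = texture_bytes(size, size) / (1024 * 1024)
        print(f"{size} x {size}: {mib:.1f} MiB with mipmaps")
```

Halving a texture's width and height cuts its memory footprint to about a quarter, which is often the cheapest optimization available.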

AR app design: Considering the environment

AR is all about merging the real world and the digital. Unfortunately, this can mean relinquishing control of the background environment in which your applications will be displayed. This is a far different experience than in VR, where you completely control every aspect of the environment. This lack of control over the AR environment can be a difficult problem to tackle, so it’s vital to keep in mind the issues that may arise in any unpredictable environment your application may be used in.

Lighting plays an important part in the AR experience. Because a user’s environment essentially becomes the world your AR models will inhabit, it’s important that they react accordingly. For most AR experiences, a moderately lit environment will typically perform best. A very bright environment, such as a room in direct sunlight, can make tracking difficult and wash out the display on some AR devices. A very dark room can also make AR tracking difficult while potentially eliminating some of the contrast of headset-based AR displays.

Many of the current AR headsets (for example, Meta 2 and HoloLens) use projections for display, so they won’t completely obscure physical objects; instead, the digital holograms appear as semitransparent on top of them.

AR is all about digital holograms existing in the environment with the user. As such, most AR usage is predicated on the user being able to move about their physical space. However, your applications could be used in real-world spaces where a user may not have the ability to move around. Consider how your application is intended to be used, and ensure that you’ve taken the potential mobility issues of your users into account. Think about keeping all major interactions for your application within arm’s reach of your users, and plan how to handle situations requiring interaction with a hologram out of the user’s reach.
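One simple way to act on the arm's-reach guideline is to compare the distance between the user and a hologram against a reach threshold, then fall back to a different interaction when the hologram is too far away. The sketch below assumes positions in meters and a hypothetical reach of 0.7 m; neither value comes from a specific device or SDK.

```python
import math

# Hypothetical comfortable reach in meters; tune for your own audience.
ARM_REACH_M = 0.7


def within_reach(user_pos, hologram_pos, reach_m: float = ARM_REACH_M) -> bool:
    """Return True if the hologram is close enough to touch directly."""
    return math.dist(user_pos, hologram_pos) <= reach_m


def interaction_mode(user_pos, hologram_pos) -> str:
    """Pick a hypothetical interaction style based on distance."""
    if within_reach(user_pos, hologram_pos):
        return "direct touch"
    return "remote pointer"


if __name__ == "__main__":
    user = (0.0, 0.0, 0.0)
    print(interaction_mode(user, (0.3, 0.0, 0.4)))  # 0.5 m away
    print(interaction_mode(user, (0.0, 0.0, 2.0)))  # 2 m away
```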

In the real world, objects provide us with depth cues to determine just where an object is in 3D space in relation to ourselves. AR objects are little more than graphics either projected in front of the real world or being displayed on top of a video feed of the real world. As such, you need to create your own depth cues for these graphics to assist users in knowing where these holograms are meant to exist in space. Consider how to visually make your holograms appear to exist in the real-world 3D space with occlusion, lighting, and shadow.

Occlusion in computer graphics typically refers to objects that appear either partially or completely behind other graphics closer to the user in 3D space. Occlusion can help a user determine where items are in 3D space in relation to one another.
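Occlusion is commonly implemented as a per-pixel depth comparison: a hologram pixel is drawn only where it is closer to the viewer than whatever is already there. The sketch below shows the idea on a single row of pixels. It assumes the device can supply a depth estimate for the real scene, which not all AR hardware provides, and all names are hypothetical.

```python
def composite_row(camera_row, hologram_row, real_depth, hologram_depth):
    """Blend one row of pixels using a depth test.

    camera_row: pixels from the real-world view.
    hologram_row: hologram pixels, or None where the hologram is absent.
    real_depth, hologram_depth: distance from the viewer for each pixel.
    """
    out = []
    for cam, holo, d_real, d_holo in zip(
        camera_row, hologram_row, real_depth, hologram_depth
    ):
        # Draw the hologram only where it exists and is nearer than the
        # real surface; otherwise the real world occludes it.
        if holo is not None and d_holo < d_real:
            out.append(holo)
        else:
            out.append(cam)
    return out


if __name__ == "__main__":
    row = composite_row(
        camera_row=["wall", "wall", "wall"],
        hologram_row=["cube", "cube", None],
        real_depth=[1.0, 3.0, 2.0],
        hologram_depth=[2.0, 2.0, 2.0],
    )
    print(row)
```

In the first pixel the real surface is nearer than the cube, so the cube is hidden there, which is the cue that tells the user the cube sits behind that surface.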

You can see an example of occlusion (foreground cubes partially blocking the visibility of the background cubes), lighting, and shadow all at play in the image below. The depth cues of occlusion, lighting, and shadow all play a part in giving the user a sense of where the holograms “exist” in space, as well as making the holographic illusion feel more real, as if the cubes actually exist in the real world, and not just the virtual.

3D holographic cubes within the real world.
